Products You May Like

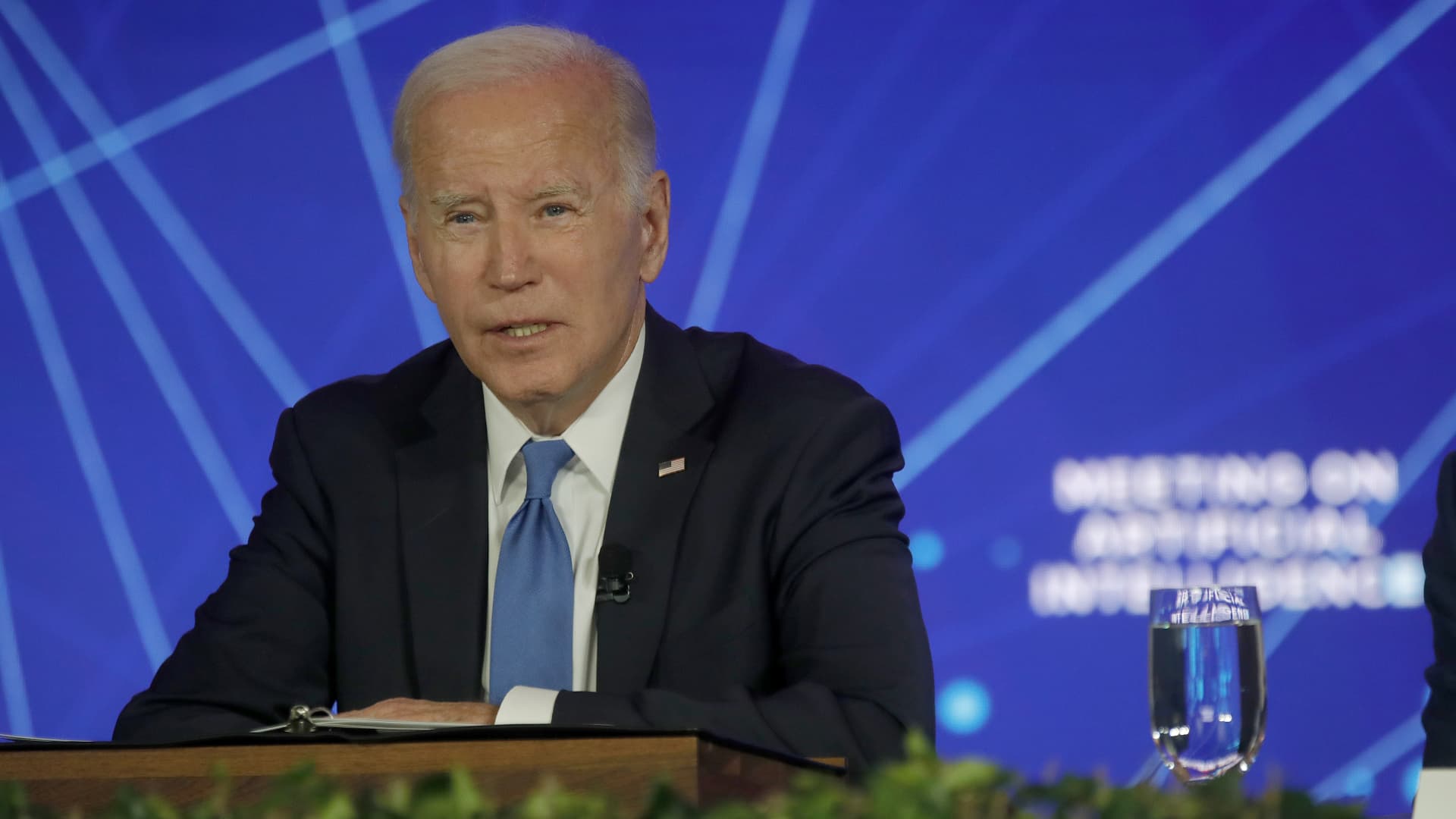

President Joe Biden unveiled a new executive order on artificial intelligence — the U.S. government’s first action of its kind — requiring new safety assessments, equity and civil rights guidance and research on AI’s impact on the labor market.

While law enforcement agencies have warned that they’re ready to apply existing law to abuses of AI and Congress has endeavored to learn more about the technology to craft new laws, the executive order could have a more immediate impact. Like all executive orders, it “has the force of law,” according to a senior administration official who spoke with reporters on a call Sunday.

The White House breaks the key components of the executive order into eight parts:

- Creating new safety and security standards for AI, including by requiring some AI companies to share safety test results with the federal government, directing the Commerce Department to create guidance for AI watermarking, and creating a cybersecurity program to develop AI tools that help identify flaws in critical software.

- Protecting consumer privacy, including by creating guidelines that agencies can use to evaluate privacy techniques used in AI.

- Advancing equity and civil rights by providing guidance to landlords and federal contractors to help keep AI algorithms from furthering discrimination, and by creating best practices on the appropriate role of AI in the justice system, including when it's used in sentencing, risk assessments and crime forecasting.

- Protecting consumers overall by directing the Department of Health and Human Services to create a program to evaluate potentially harmful AI-related healthcare practices and creating resources on how educators can responsibly use AI tools.

- Supporting workers by producing a report on the potential labor market implications of AI and studying the ways the federal government could support workers impacted by a disruption to the labor market.

- Promoting innovation and competition by expanding grants for AI research in areas like climate change and modernizing the criteria for highly skilled immigrant workers with key expertise to stay in the U.S.

- Working with international partners to implement AI standards around the world.

- Developing guidance for federal agencies' use and procurement of AI and speeding up the government's hiring of workers skilled in the field.

The order represents “the strongest set of actions any government in the world has ever taken on AI safety, security, and trust,” White House Deputy Chief of Staff Bruce Reed said in a statement.

It builds on voluntary commitments the White House previously secured from leading AI companies and represents the first major binding government action on the technology. It also comes ahead of an AI safety summit hosted by the U.K.

The senior administration official noted that 15 major American technology companies have agreed to implement voluntary AI safety commitments, but said that this "is not enough" and that Monday's executive order is a step toward concrete regulation of the technology's development.

“The President, several months ago, directed his team to pull every lever, and that’s what this order does: bringing the power of the federal government to bear in a wide range of areas to manage AI’s risk and harness its benefits,” the official said.

President Biden’s executive order requires that large companies share safety test results with the U.S. government before the official release of AI systems. It also prioritizes the National Institute of Standards and Technology’s development of standards for AI “red-teaming,” or stress-testing the defenses and potential problems within systems. The Department of Commerce will develop standards for watermarking AI-generated content.

The order also addresses training data for large AI systems, and it lays out the need to evaluate how agencies collect and use commercially available data, including data purchased from data brokers, especially when that data involves personal identifiers.

The Biden administration is also taking steps to beef up the AI workforce. Beginning Monday, the senior administration official said, workers with AI expertise can find relevant openings in the federal government on AI.gov.

As for the timeframe of the actions dictated by the executive order, the administration official said Sunday that the "most aggressive" deadline, for some safety and security aspects of the order, is a 90-day turnaround, while for other aspects the timeframe could be closer to a year.

Building on earlier AI actions

Monday’s executive order follows a number of steps the White House has taken in recent months to create spaces to discuss the pace of AI development, as well as proposed guidelines.

Since the viral rollout of ChatGPT in November 2022 — which within two months became the fastest-growing consumer application in history, according to a UBS study — the widespread adoption of generative AI has already led to public concerns, legal battles and lawmaker questions. For instance, days after Microsoft folded ChatGPT into its Bing search engine, it was criticized for toxic speech, and popular AI image generators have come under fire for racial bias and propagating stereotypes.

President Biden’s executive order directs the Department of Justice, as well as other federal offices, to develop standards for “investigating and prosecuting civil rights violations related to AI,” the administration official said Sunday on the call with reporters.

"The President's executive order requires that clear guidance must be provided to landlords, federal benefits programs and federal contractors to keep AI algorithms from being used to exacerbate discrimination," the official added.

In August, the White House challenged thousands of hackers and security researchers to outsmart top generative AI models from the field’s leaders, including OpenAI, Google, Microsoft, Meta and Nvidia. The competition ran as part of DEF CON, the world’s largest hacking conference.

“It is accurate to call this the first-ever public assessment of multiple LLMs,” a representative for the White House Office of Science and Technology Policy told CNBC at the time.

The competition followed a July meeting between the White House and seven top AI companies, including Alphabet, Microsoft, OpenAI, Amazon, Anthropic, Inflection and Meta. Each of the companies left the meeting having agreed to a set of voluntary commitments in developing AI, including allowing independent experts to assess tools before public debut, researching societal risks related to AI and allowing third parties to test for system vulnerabilities, such as in the August DEF CON competition.

WATCH: How A.I. could impact jobs of outsourced coders in India